Fine-Tuned LLaMA Outperforms GPT-4 and Claude

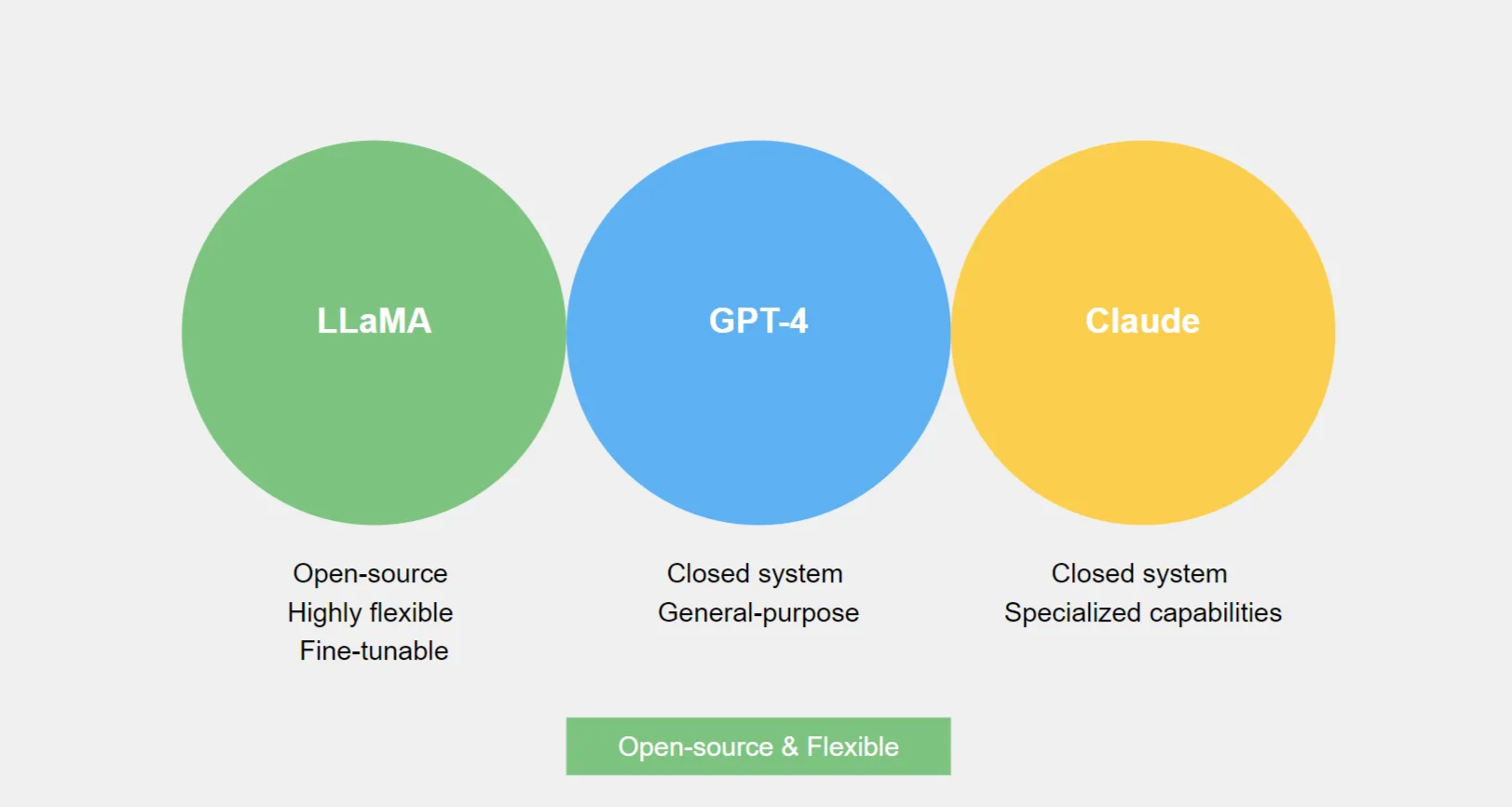

Introduction to LLaMA, GPT-4, and Claude: Key Differences

An infographic illustrating the key features of LLaMA, GPT-4, and Claude

LLaMA, an open-source AI model, is highly flexible due to its fine-tuning capabilities, unlike closed systems such as GPT-4 and Claude. This makes it suitable for specialized tasks where adaptability is crucial.

The Importance of Fine-Tuning

Fine-tuning LLaMA enables businesses to tailor its performance for specific tasks. Open-source accessibility gives companies greater control, while transfer learning accelerates this process, significantly reducing training times and costs.
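One way to see where the savings in training time and cost can come from is to count how many parameters are actually updated. The sketch below is illustrative arithmetic only. It assumes a parameter-efficient method, low-rank adapters (LoRA), which the article does not name, and the layer count, hidden size, rank, and choice of adapted matrices are hypothetical values picked for the example rather than figures from any of the reports cited here.

```python
# Illustrative arithmetic: trainable parameters under full fine-tuning
# versus low-rank adapters (LoRA). All sizes below are assumptions.

def full_params(d_in: int, d_out: int) -> int:
    """Parameters in one dense weight matrix of shape (d_out, d_in)."""
    return d_in * d_out


def lora_trainable_params(d_in: int, d_out: int, rank: int) -> int:
    """Parameters in one low-rank adapter: A (rank x d_in) plus B (d_out x rank)."""
    return rank * (d_in + d_out)


# Hypothetical model shape, chosen only for illustration.
HIDDEN = 4096            # width of each attention projection
LAYERS = 32              # number of transformer layers
ADAPTED_PER_LAYER = 2    # assume two projection matrices per layer get adapters
RANK = 8                 # assumed adapter rank

n_matrices = LAYERS * ADAPTED_PER_LAYER
full = n_matrices * full_params(HIDDEN, HIDDEN)
lora = n_matrices * lora_trainable_params(HIDDEN, HIDDEN, RANK)

print(f"Updating the matrices directly: {full:,} trainable parameters")
print(f"Updating rank-{RANK} adapters only: {lora:,} trainable parameters")
print(f"Reduction factor: {full // lora}x")
```

Under these assumed sizes, the adapters hold 256 times fewer trainable parameters than the matrices they sit beside, because the pretrained weights stay frozen and only the small adapter matrices are learned. Actual savings depend on the model, the method, and the hardware.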

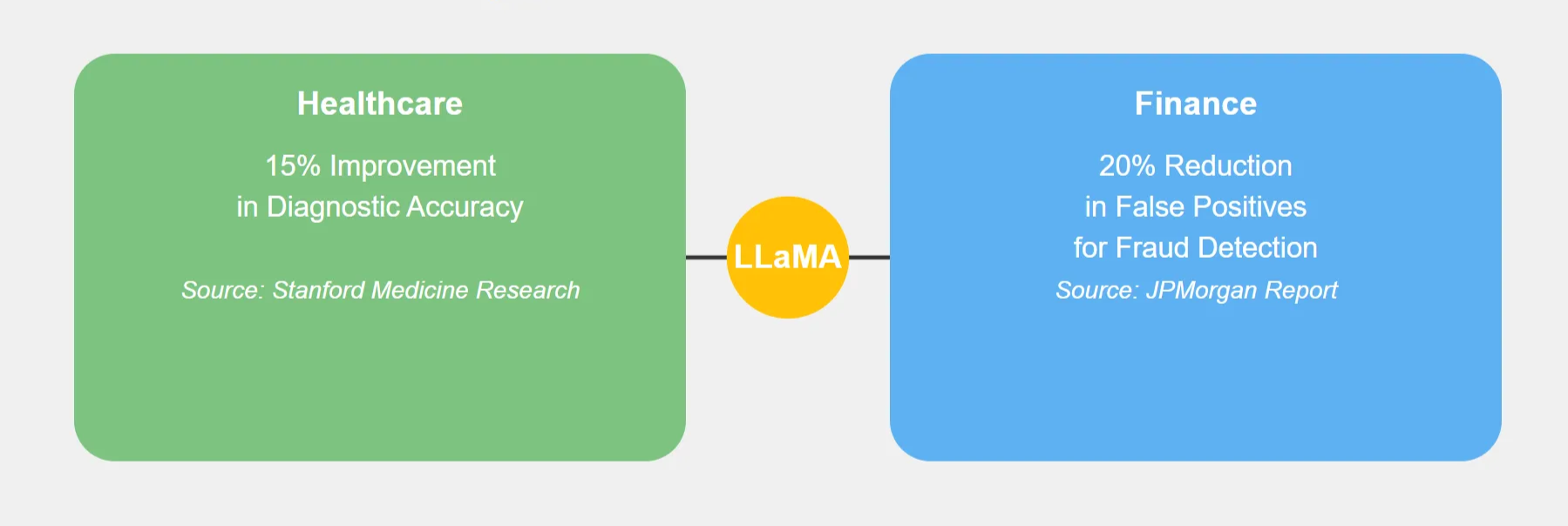

Real-World Performance: Proof-Based Comparisons

- Healthcare: According to research from Stanford Medicine, fine-tuned models like LLaMA improve diagnostic accuracy by 15% over general-purpose models like GPT-4.
- Finance: JPMorgan's report revealed that fine-tuned AI reduced false positives in fraud detection by 20%, as LLaMA was tailored to their financial transaction data.

an infographic showing the effects of LLaMA on healthcare and finance

- Customer Service: Zendesk highlighted a significant increase in customer satisfaction when switching to a fine-tuned LLaMA chatbot for handling complex queries.

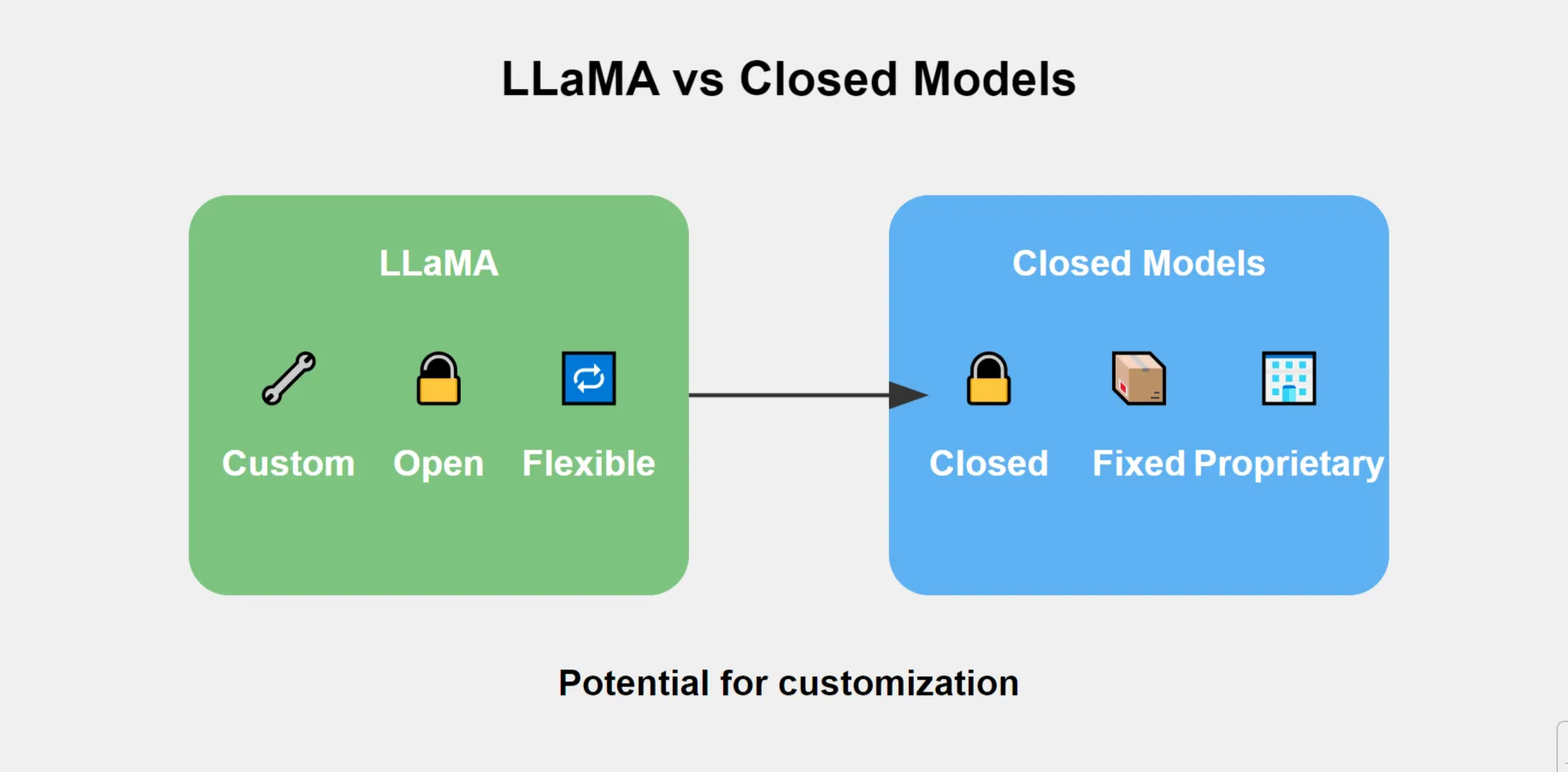

Open Source: Flexibility in Deployment

LLaMA’s open-source nature lets companies fine-tune it more easily than closed systems like GPT-4 and Claude. According to recent AI adoption studies at MIT, this adaptability leads to faster, more efficient deployments.

Conclusion: Adaptable Future of AI

a brief comparison of LLaMA vs Closed Models

Fine-tuned models like LLaMA provide a blend of flexibility, efficiency, and performance that closed models struggle to match. Businesses seeking customizable AI solutions will find LLaMA’s open-source approach a strategic advantage.